Many of our space programs rely on customized image processing to produce the intended product. As such, we have developed expertise in applying advanced image processing methods to achieve, and often surpass, mission goals. Here are a few examples:

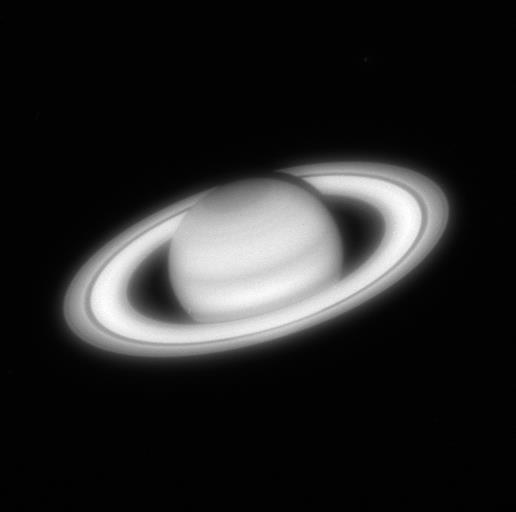

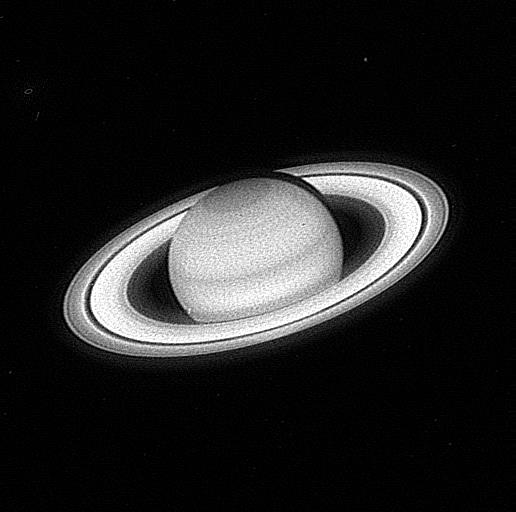

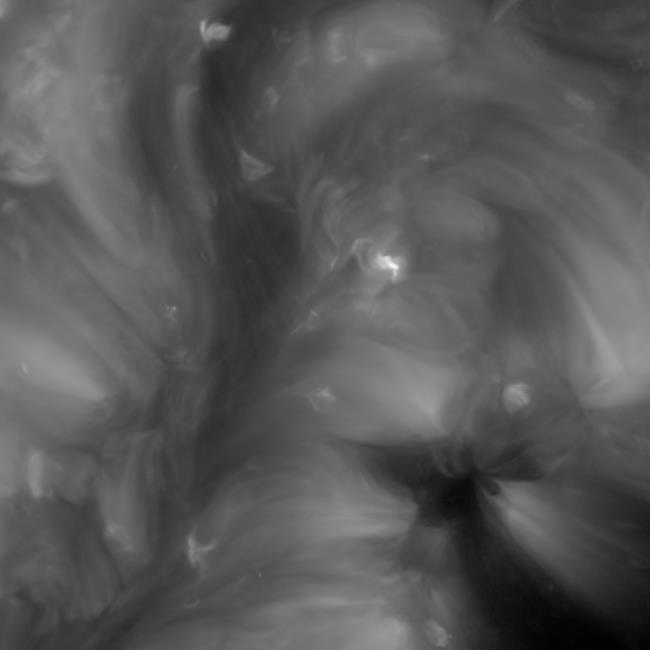

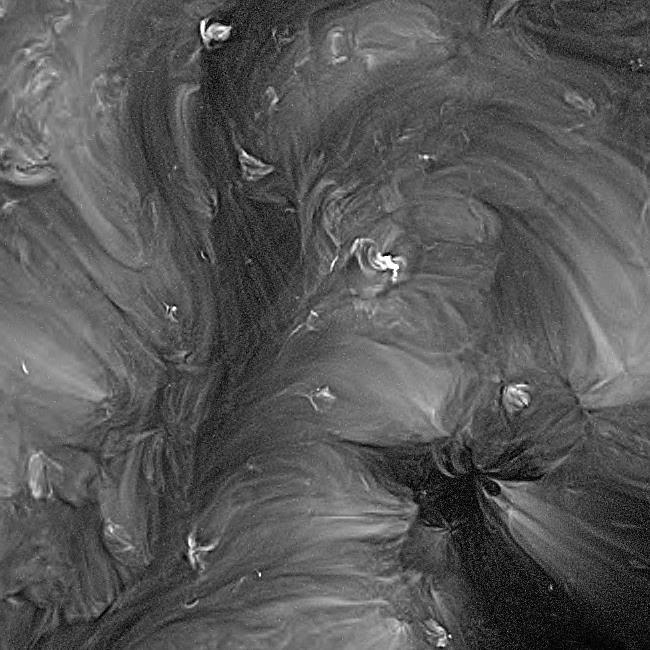

During our support of a sparse aperture imaging program, a method was needed to remove complicated blur functions from video sequences. Calculated and/or measured blur functions (also called point spread functions, or PSFs) were insufficient to produce an end product without large artifacts, and the measurement process was very time consuming. Existing blind deconvolution methods (which find the PSF without measuring or modelling it) required large amounts of computation and put constraints on the type of blur that could be removed. We found a solution in the Fourier domain. Dr. Caron developed an algorithm, SeDDaRA (Caron, J. N., et al. Optics Letters 26.15 (2001): 1164-1166.), that extracts the PSF by comparing the spatial frequencies of the blurred image with the spatial frequencies of a similar, but not blurred, image. A pseudo-inverse filter then removes the PSF from the image, producing a clear, artifact-free result in a few seconds of computation.
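To make the Fourier-domain idea concrete, here is a minimal NumPy sketch of the general approach. It is a simplified illustration and not the published SeDDaRA algorithm: it assumes a zero-phase (symmetric) blur, estimates the transfer function as a plain ratio of smoothed magnitude spectra, and uses a Wiener-style regularized inverse as the pseudo-inverse filter. The function names and the parameters `size`, `eps`, and `k` are hypothetical choices made for this example.

```python
import numpy as np

def smooth_spectrum(mag, size=5):
    """Box-average a magnitude spectrum with circular wrap-around."""
    kernel = np.zeros_like(mag)
    kernel[:size, :size] = 1.0 / size**2
    # Center the box on the origin so the average is not shifted.
    kernel = np.roll(kernel, (-(size // 2), -(size // 2)), axis=(0, 1))
    return np.real(np.fft.ifft2(np.fft.fft2(mag) * np.fft.fft2(kernel)))

def estimate_otf(blurred, reference, eps=1e-6):
    """Estimate a zero-phase transfer function from two same-shape images.

    Assumption: the reference has spatial-frequency content similar to
    the unblurred scene, so the ratio of smoothed spectra approximates
    the magnitude of the blur's transfer function.
    """
    g_mag = np.abs(np.fft.fft2(blurred))
    r_mag = np.abs(np.fft.fft2(reference))
    ratio = smooth_spectrum(g_mag) / (smooth_spectrum(r_mag) + eps)
    return np.clip(ratio, 0.0, 1.0)

def pseudo_inverse_deblur(blurred, otf, k=1e-2):
    """Apply a regularized (Wiener-style) inverse filter.

    The constant k keeps the filter bounded where the transfer
    function is close to zero, which limits noise amplification.
    """
    g = np.fft.fft2(blurred)
    restored = g * otf / (otf**2 + k)
    return np.real(np.fft.ifft2(restored))
```

In use, one would call `estimate_otf(blurred, reference)` once and pass the result to `pseudo_inverse_deblur`. Both steps cost only a few FFTs per frame, which is consistent with the short computation times described above, although the sketch makes no claim to match the published method's accuracy.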
